Runge-Kutta Methods

Discussion

Euler's method and the improved Euler's method are the simplest examples of a whole family of numerical methods to approximate the solutions of differential equations called Runge-Kutta methods. In this section we will give third and fourth order Runge-Kutta methods and discuss how Runge-Kutta methods are developed. Euler's method and the improved Euler's method both try to approximate $y(x_0+h)$ by approximating the slope $m$ of the secant line from $(x_0,y(x_0))$ to $(x_0+h,y(x_0+h))$ and using the formula $y(x_0+h)=y(x_0)+mh$ (see figure 1).

Third Order Runge-Kutta Method

To approximate the solution of $$ \frac{dy}{dx}=f(x,y),\qquad y(x_0)=y_0 $$ compute $$ \begin{align} x_1&=x_0+h \\ k_1&=f(x_0,y_0) \\ k_2&=f(x_0+h,y_0+hk_1) \\ k_3&=f(x_0+h/2,y_0+(h/2)(k_1+k_2)/2) \\ y_1&=y_0+\frac{k_1+k_2+4k_3}{6}h \end{align} $$ Then $y(x_1)\approx y_1$. This method uses the improved Euler method to find an approximate midpoint of the secant line and then takes a weighted average of the slopes at the left and right endpoints and the midpoint. Note that if $f(x,y)$ is just $f(x)$, a function of $x$ alone, then solving the differential equation $\displaystyle\frac{dy}{dx}=f(x)$ is just evaluating the integral $\displaystyle\int_{x_0}^{x_1} f(x)\quad dx$. In this case, the third order Runge-Kutta method is the same as Simpson's rule for numerical approximation of integrals from Calculus 2. Just as for Euler's method and the improved Euler's method, the RK3 method can be easily put in a spreadsheet. The first part of a spreadsheet for the RK3 method applied to $\displaystyle\frac{dy}{dx}=2xy$, $y(0)=1$ is shown below. Note that this is the problem for exercise 1, so you can use this spreadsheet to check your work.

Fourth Order Runge-Kutta method

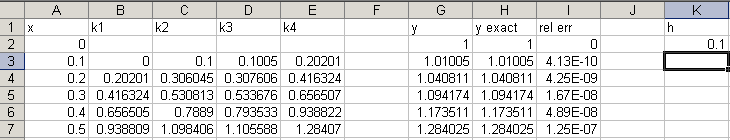

To approximate the solution of $$ \frac{dy}{dx}=f(x,y),\qquad y(x_0)=y_0 $$ compute $$ \begin{align} x_1&=x_0+h \\ k_1&=f(x_0,y_0) \\ k_2&=f(x_0+h/2,y_0+(h/2)k_1) \\ k_3&=f(x_0+h/2,y_0+(h/2)k_2) \\ k_4&=f(x_0+h,y_0+hk_3) \\ y_1&=y_0+\frac{k_1+2k_2+2k_3+k_4}{6}h \end{align} $$ Then $y(x_1)\approx y_1$. Just as with Euler's method and the improved Euler's method, we can repeat the process to find an $x_2=x_1+h=x_0+2h$ and $y_2\approx y(x_2)=y(x_0+2h)$ and so on. The fourth order Runge-Kutta method listed above is powerful enough that it was a popular technique for practical computation (and not just for educational purposes). The steps are well suited to programming into a computer, just loop through the basic algorithm as many times as needed to get to the values you are interested in. The first part of a spreadsheet for the RK4 method applied to $\displaystyle\frac{dy}{dx}=2xy$, $y(0)=1$ is shown below. Note that this is the problem for exercise 2, so you can use this spreadsheet to check your work.

Exercises

- Use the third order Runge-Kutta method with step sizes $h=0.1$ and $h=0.05$ to approximate $y(2)$ for the initial value problem $\displaystyle\frac{dy}{dx}=2xy$, $y(0)=1$. Find the error in both approximations.

- Use the fourth order Runge-Kutta method with step sizes $h=0.1$ and $h=0.05$ to approximate $y(2)$ for the initial value problem $\displaystyle\frac{dy}{dx}=2xy$, $y(0)=1$. Find the error in both approximations.

- Show that approximating $y(b)$ using one step of the third order Runge-Kutta method for the initial value problem $\displaystyle\frac{dy}{dx}=f(x)$, $y(a)=y_0$ gives the same formula as Simpson's rule for $\displaystyle\int_a^b f(x)\quad dx.$ Simpson's rule is covered in your calculus textbook (or, if you don't have your book any more, either Wikipedia or Wolfram MathWorld have reasonable explanations).

Runge-Kutta-Fehlberg Methods

Discussion

The trickiest part of using Runge-Kutta methods to approximate the solution of a differential equation is choosing the right step-size. Too large a step-size and the error is too large and the approximation is inaccurate. Too small a step-size and the process will take too long and possibly have too much roundoff error to be accurate. Furthermore, the appropriate step-size may change during the course of a single problem. Many problems in celestial mechanics, chemical reaction kinematics, and other areas have long periods of time where nothing much is happening (and for which large step-sizes are appropriate) mixed in with periods of intense activity where a small step-size is vital. What we need is an algorithm which includes a method for choosing the appropriate step-size at each step. The Runge-Kutta-Fehlberg methods do just this, which is why they have largely replaced the Runge-Kutta methods in practice. Fehlberg's improvement to the Runge-Kutta method is based on two assumptions.- The error in a single step of the improved Euler's method is about $C'h^3$ and the error in a single step of the third order Runge-Kutta method is about $C''h^4$ where $C'$ and $C''$ are constants that depend on the problem but not the step size. (The error in a single step is the error in $y(x_0+h)$ computed with a step-size of $h$. It is also called the local error.)

- $C''h^4$ is much smaller than $C'h^3$.

Example

Approximate $y(1)$ if $dy/dx=x+y$, $y(0)=0$ using the RK2(3) method with tolerance $T=0.01$. (This tolerance is rather large, but I want to work things out by hand so I don't want to take too many steps.) We need to pick an initial step-size to get things started. We have to go from $x_0=0$ to $x=1$ so why not go for it all in one shot and guess $h=1$ initially. Actually, the reason not to use that large a step-size for the beginning is that it is usually too large and often takes a couple of iterations before you get the step-size down to a reasonable size and actually get the process off and running. Choosing a step-size of about the length of the interval divided by 16 or 32 is more typical. But I wanted to be sure I had to reject the estimate sometime in the course of the example so I decided to start off wrong with too large a step size to be sure that happened. We carry out the following computations. $$\begin{align} k1&=0\\ k2&=1\\ k3&=.75\\ y_1'&=.5\\ y_1''&=.666666\ldots\\ |y_1'-y_1''|&=.166666\ldots>.01 \end{align}$$ The estimated error is greater than the tolerance $.01$ so we reject the initial step-size of $h=1$. We compute a new step-size and try again. $$ \begin{align} h_{\text{new}}&=.9*1*(.01/.166666\ldots)^{1/3}=.3523380877\\ k1&=0\\ k2&=.35233880877\\ k3&=.2072045759\\ y_1'&=.062071064\\ y_1''&=.069361064\\ |y_1'-y_1''|&=.00729<.01 \end{align}$$ This time the estimated error is less than the tolerance so we accept the step-size and estimate and compute $$\begin{align} x_1&=x_0+h=.3523380877\\ y_1&=y_1''=.069361064 \end{align}$$ We now compute a new step-size $h_{\text{new}}$ and go on to the next step (Twice in this problem, we compute a new step-size and it turns out to exactly equal the old step-size. This is a freak accident. I couldn't have planned that to happen if I tried.) $$ \begin{align} h_{\text{new}}&=.9*.3523380877*(.01/.00729\ldots)^{1/3}=.3523380877\\ k1&=.4216991517\\ k2&=.9276179121\\ k3&=.7162817215\\ y_2'&=.3061881158\\ y_2''&=.3165523026\\ |y_2'-y_2''|&=.0103641868>.01 \end{align}$$ This time the estimated error is greater than the tolerance so we reject the step-size and try again with the same $x_1$ and $y_1$. $$ \eqalign{ h_{\text{new}}&=.9*.3523380877*(.01/.0103641868)^{1/3}=.3133456655\cr k1&=.4216991515\cr k2&=.8671824185\cr k3&=.6793383478\cr y_2'&=.2712937907\cr y_2''&=.2785837907\cr |y_2'-y_2''|&=.00729<.01\cr }$$ The estimated error is less than the tolerance so we accept the step-size and compute $$\eqalign{ x_2&=x_1+h=.6656837532\cr y_2&=y_2''=.2785837907\cr }$$ We now compute the new step-size for the next step and repeat the process. $$ \eqalign{ h_{\text{new}}&=.9*.3133456655*(.01/.00729)^{1/3}=.3133456655\cr k1&=.9442675439\cr k2&=1.553495351\cr k3&=1.296606171\cr y_3'&=.669915379\cr y_3''&=.679849358\cr |y_3'-y_3''|&=.0099695568<.01\cr }$$ The estimated error is less than the tolerance so we accept the estimate and compute $$\eqalign{ x_3&=x_2+h=.9790294187\cr y_3&=y_3''=.679849358\cr }$$ We now compute the step-size for the next step $$h_{\text{new}}=.9*.3133456655*(.01/.0099695568)^{1/3} =.2822978588$$ But this step-size is too large since $x_3+h=1.261327277>1$ and so it would put us past our final value for $x$. Therefore we shrink $h$ to hit $x=1$ exactly. $$ \eqalign{ h_{\text{new}}&=1-x_3=.0209705813\cr k1&=1.658914354\cr k2&=1.714673334\cr k3&=1.687086169\cr y_4'&=.7152579833\cr y_4''&=.7152620701\cr |y_4'-y_4''|&=.00000408681<.01\cr }$$ The estimated error is less than the tolerance so we accept this estimate and get the final computations $$\eqalign{ x_4&=x_3+h=1\cr y_4&=y_4''=.7152620701\cr }$$ So our approximation for $y(1)=y(x_4)\approx y_4=.7152620701$. The true value is $y(1)=.7182818285$ and the error is .0030197584 or a percent error of 0.42%. You may be wondering how we got a relative error of less than 1% when we had a tolerance of 1% error in each individual step. The trick is that we estimate the error using the less accurate improved Euler's method but use the estimates from the more accurate RK3 method. That way we have a safety margin for our estimate of the error. There are many different Runge-Kutta-Fehlberg methods, all of which involve comparing two different Runge-Kutta approximations to get an estimated error and step-size. The RK2(3) method is a low order method which gives moderately accurate results with only 3 function evaluations at each step. If high accuracy is important, you should use a higher order method. On the other hand, if you just need an answer to within 1% for a problem that doesn't cover too large a range of $x$ values, the RK2(3) method is quick, cheap and effective (with the recommended tolerance of $T=.001$).Exercises

- In the lab you discovered halving the step size reduced the error in the improved Euler method by a factor of about 4. Explain why this fits with the global error being proportional to $h^2$ for the improved Euler method. Note, this is why it is called a second order method and denoted with the 2 in naming the RKF23 method)

- In the ingredients for the Runge-Kutta-Fehlberg method, we assume the local error of the improved Euler's method is about $C'h^3$. How does this fit with the error of the approximation for $y(1)$ being about $k'h^2$ (which is what it means for the error to be proportional to $h^2$)? Hint: How many steps of size $h$ does it take to get from $x=0$ to $x=1$?

- Explain why you are guaranteed that if the estimated error is greater than the tolerance, so you reject the estimate, the revised step-size will be smaller than the previous step-size (i.e. $ h_{\text{new}}<h$). If the estimated error is less than the tolerance, so you accept the estimate, will the revised step-size necessarily increase for the next step? For the next two problems you may want to program the RK2(3) method into a calculator or computer. Unfortunately, unlike the first extra credit assignment, this method isn't well suited to a spreadsheet because you keep having to test the step-size and possibly change it (perhaps more than once) at each step, though some students have used a spreadsheet successfully anyway.

- Approximate $y(2)$ using the RK2(3) method for $y'=2xy$, $y(0)=1$ and a tolerance of $T=.001$. You can use whatever initial step-size you want. Then compute the exact answer for the initial value problem and find the percent error in the RK2(3) method.

- Approximate $y(2)$ using the RK2(3) method for $y'=x+y$, $y(0)=0$ with a tolerance of $T=.001$.

If you have any problems with this page, please contact bennett@ksu.edu.

©1994-2025 Andrew G. Bennett